Explanation#

- This analysis is based on React v18.1.0.

- The concept of interleaved updates comes up repeatedly when debugging useState; please refer to this link.

- The article is generally based on the code below for analysis, with some modifications depending on the situation.

- On 2022-10-14, a source code analysis regarding batching (involving task reuse [related] and high-priority interruption [mentioned in the code]) and a brief summary of the scheduling phase were added.

import { useState } from "react";

function UseState() {

const [text, setText] = useState(0);

return (

<div onClick={ () => { setText(1) } }>{ text }</div>

)

}

export default UseState;

TL;DR#

- The dispatch of useState is asynchronous: the new state is not visible until the next render.

- The implementation of useState is based on useReducer; in other words, useState is a special case of useReducer.

- Each call to dispatch generates a corresponding update instance; multiple calls to the same dispatch are linked into a circular linked list of updates. Each update has a corresponding priority (lane).

- If the generated update is determined to need scheduling, React schedules a render for it; for a synchronous-priority (SyncLane) update, such as one dispatched from a click handler, that render is flushed in a microTask.

- During the update phase of useState, to respond quickly to high-priority updates, only the updates in the linked list whose priority is sufficient are processed first; low-priority updates are processed in a later render.

- For a summary of batching and the scheduling phase (scheduling → reconciliation → rendering), please refer to the end of the article.

useState in the mount scenario#

When debugging directly by setting breakpoints on useState, we will enter mountState.

useState<S>(

initialState: (() => S) | S,

): [S, Dispatch<BasicStateAction<S>>] {

···

// initialState is the initial value passed to useState

return mountState(initialState);

···

}

function mountState<S>(

initialState: (() => S) | S,

): [S, Dispatch<BasicStateAction<S>>] {

// Steps for generating hooks linked list, see [previous article](https://github.com/IWSR/react-code-debug/issues/2)

const hook = mountWorkInProgressHook();

if (typeof initialState === 'function') {

// The type declaration of initialState indicates it can also be a function

initialState = initialState();

}

// The memoizedState and baseState on the hook will cache the initial value of useState

hook.memoizedState = hook.baseState = initialState;

// An important structure that will come up again in the update phase

const queue: UpdateQueue<S, BasicStateAction<S>> = {

pending: null,

interleaved: null,

lanes: NoLanes,

dispatch: null,

lastRenderedReducer: basicStateReducer,

lastRenderedState: (initialState: any),

};

hook.queue = queue; // Attach the update queue to the hook object corresponding to this useState call

const dispatch: Dispatch<

BasicStateAction<S>,

> = (queue.dispatch = (dispatchSetState.bind(

null,

currentlyRenderingFiber,

queue,

): any)); // The focus of this article, a very important function

// This is the return value destructured by const [text, setText] = useState(0);

return [hook.memoizedState, dispatch];

}

Thus, the analysis of useState in the mount phase is complete; there is very little to it.
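The dispatch returned above is simply dispatchSetState with its first two arguments already filled in. Here is a minimal sketch of that binding pattern. The dispatchSetState body, the fiber, and the queue are simplified stand-ins for illustration, not React's real objects:

```javascript
// Simplified stand-in for React's dispatchSetState: it only records its arguments.
function dispatchSetState(fiber, queue, action) {
  queue.received.push({ fiberName: fiber.name, action });
}

const fiber = { name: "UseState" }; // hypothetical fiber
const queue = { received: [], dispatch: null }; // hypothetical queue

// Same pattern as in mountState: pre-fill `fiber` and `queue`, leave `action` open.
const dispatch = (queue.dispatch = dispatchSetState.bind(null, fiber, queue));

dispatch(1);
dispatch((prev) => prev + 1);

console.log(queue.received.length); // 2
console.log(queue.dispatch === dispatch); // true
```

Because the fiber and queue are captured once at mount time, the component only ever has to pass the action.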

Also, please pay attention to the basicStateReducer function.

function basicStateReducer<S>(state: S, action: BasicStateAction<S>): S {

return typeof action === 'function' ? action(state) : action;

}
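To make the two branches of basicStateReducer concrete, here is a small sketch with the Flow types removed. The sample values are made up for illustration:

```javascript
// Same logic as basicStateReducer above, without the Flow annotations.
function basicStateReducer(state, action) {
  return typeof action === "function" ? action(state) : action;
}

// setText(1): the action is a plain value, so it becomes the new state.
const fromValue = basicStateReducer(0, 1);

// setText((prev) => prev + 1): the action is a function, so it receives the old state.
const fromUpdater = basicStateReducer(1, (prev) => prev + 1);

// Consequence: to store a function as state, it has to be wrapped in an updater.
const handler = () => "hello";
const storedFunction = basicStateReducer(null, () => handler);

console.log(fromValue, fromUpdater, storedFunction === handler); // 1 2 true
```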

Summary#

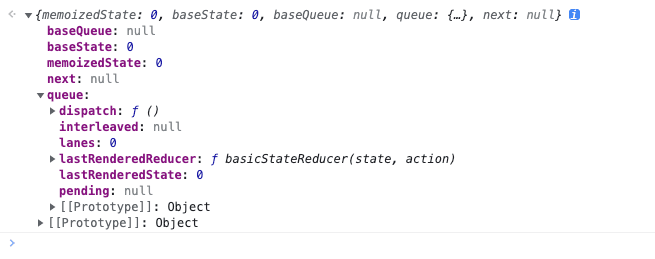

The useState in the mount phase serves primarily as an initializer; after the function call ends, we obtain the hooks object corresponding to this useState. The object looks like this:
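As a rough textual sketch of that object, this is what mountState would leave behind for useState(0), based on the fields assigned in the code above. The dispatch placeholder and the numeric value used for NoLanes are assumptions made for illustration:

```javascript
const NoLanes = 0; // assumed numeric value for illustration

function basicStateReducer(state, action) {
  return typeof action === "function" ? action(state) : action;
}

const initialState = 0;

// The update queue created in mountState.
const queue = {
  pending: null, // no update has been dispatched yet
  interleaved: null,
  lanes: NoLanes,
  dispatch: null, // filled in with the bound dispatchSetState
  lastRenderedReducer: basicStateReducer,
  lastRenderedState: initialState,
};

// The hook object itself; `next` points to the next hook of the component.
const hook = {
  memoizedState: initialState,
  baseState: initialState,
  baseQueue: null,
  queue,
  next: null,
};

console.log(hook.memoizedState, hook.queue.pending); // 0 null
```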

Triggering the dispatch of useState#

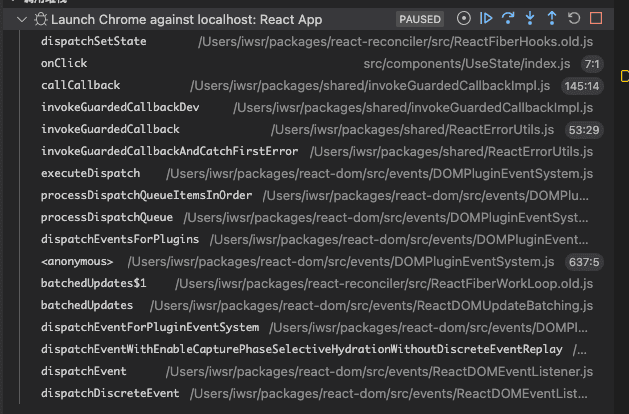

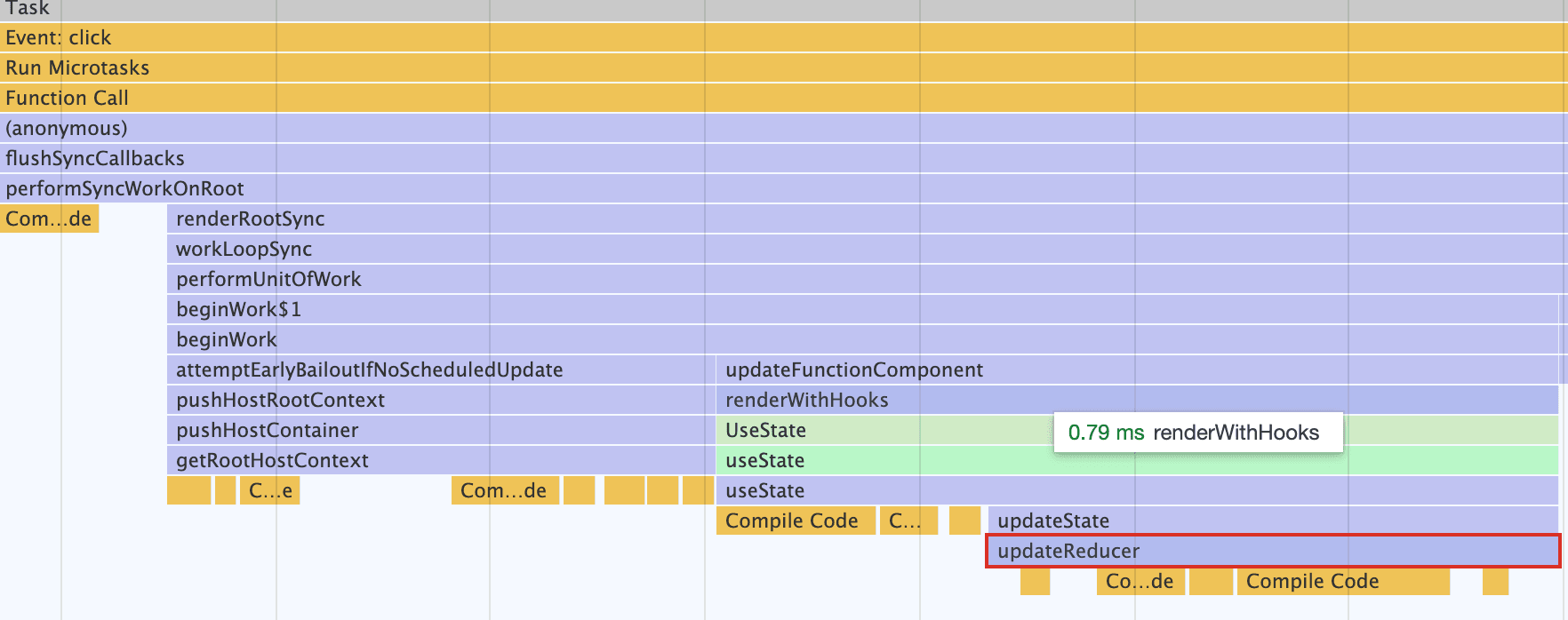

At this point, set a breakpoint on the dispatch returned by useState and trigger a click event. The following call stack is obtained:

dispatchSetState is a key function, and I want to emphasize it again.

function dispatchSetState<S, A>(

fiber: Fiber,

queue: UpdateQueue<S, A>,

action: A,

) {

/**

* Here, it can be understood as obtaining the priority of the current update

*/

const lane = requestUpdateLane(fiber);

/**

* Similar to the update linked list of setState

* Here the update is also a linked list structure

*/

const update: Update<S, A> = {

lane,

action,

hasEagerState: false,

eagerState: null,

next: (null: any),

};

/**

* Determine if it is a render phase update

*/

if (isRenderPhaseUpdate(fiber)) {

enqueueRenderPhaseUpdate(queue, update);

} else {

const alternate = fiber.alternate;

/**

* Check whether this fiber currently has no pending updates (no lanes on the fiber or on its alternate)

* For example, after the first call to dispatch, fiber.lanes is no longer NoLanes

* Therefore, the second call to dispatch skips the eagerState evaluation for its update instance

*/

if (

fiber.lanes === NoLanes &&

(alternate === null || alternate.lanes === NoLanes)

) {

// The queue is currently empty, which means we can eagerly compute the

// next state before entering the render phase. If the new state is the

// same as the current state, we may be able to bail out entirely.

const lastRenderedReducer = queue.lastRenderedReducer;

if (lastRenderedReducer !== null) {

let prevDispatcher;

const currentState: S = (queue.lastRenderedState: any);

/**

* Calculate the expected state and stash it in eagerState

* The logic inside lastRenderedReducer is as follows

* Check if the current action is a function

* After all, there are calls like setText((preState) => preState + 1)

* If it is a function, pass currentState and call action to get the computed value

* If not, for example, in calls like setText(1), just return action directly

*/

const eagerState = lastRenderedReducer(currentState, action);

// Stash the eagerly computed state, and the reducer used to compute

// it, on the update object. If the reducer hasn't changed by the

// time we enter the render phase, then the eager state can be used

// without calling the reducer again.

update.hasEagerState = true; // According to the comment, to prevent repeated computation by the reducer

update.eagerState = eagerState;

/**

* Check whether the values are equal using Object.is (like ===, except that NaN equals NaN and +0 differs from -0)

*/

if (is(eagerState, currentState)) {

// Fast path. We can bail out without scheduling React to re-render.

// It's still possible that we'll need to rebase this update later,

// if the component re-renders for a different reason and by that

// time the reducer has changed.

// TODO: Do we still need to entangle transitions in this case?

/**

* According to the comment, if the value hasn't changed, there's no need to schedule React to re-render

* The function below just handles the structure of the update linked list

*/

enqueueConcurrentHookUpdateAndEagerlyBailout(

fiber,

queue,

update,

lane,

);

return; // Since there's no need to schedule, the function is interrupted here

}

}

}

/**

* An update that reaches this point needs to be re-rendered on the page

* Unlike enqueueConcurrentHookUpdateAndEagerlyBailout, enqueueConcurrentHookUpdate

* also calls markUpdateLaneFromFiberToRoot

* That function merges the lane of the current update into the childLanes of every ancestor of the fiber where the update originated (fiber.return, fiber.return.return, and so on), up to the root.

* The purpose of calling markUpdateLaneFromFiberToRoot is to prepare for scheduling in scheduleUpdateOnFiber

*/

const root = enqueueConcurrentHookUpdate(fiber, queue, update, lane);

if (root !== null) {

const eventTime = requestEventTime();

/**

* Enter the scheduling process (the analysis of scheduleUpdateOnFiber has been added at the bottom)

* In v18, a synchronous-priority update (for example, one dispatched from a click handler) is flushed in a microTask

* In v17, it was registered into the scheduler with the corresponding priority

*/

scheduleUpdateOnFiber(root, fiber, lane, eventTime);

/**

* An unstable function, roughly handles lanes, a bit difficult to understand, not analyzed

*/

entangleTransitionUpdate(root, queue, lane);

}

}

markUpdateInDevTools(fiber, lane, action);

}
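The eager bailout path is easier to follow in isolation. Here is a minimal model of it. The model object, the lane value, and the returned strings are assumptions made for illustration, and unlike React this sketch does not enqueue the update on the bailout path:

```javascript
const NoLanes = 0;
const SyncLane = 1; // illustrative lane value

function basicStateReducer(state, action) {
  return typeof action === "function" ? action(state) : action;
}

// Hypothetical container for the pieces dispatchSetState touches.
function createModel(initialState) {
  return {
    fiber: { lanes: NoLanes },
    queue: { lastRenderedReducer: basicStateReducer, lastRenderedState: initialState },
    scheduled: 0, // how many times a render was requested
  };
}

function dispatch(model, action) {
  const { fiber, queue } = model;
  const update = { lane: SyncLane, action, hasEagerState: false, eagerState: null };
  if (fiber.lanes === NoLanes) {
    // No pending work on this fiber: compute the next state right away.
    const eagerState = queue.lastRenderedReducer(queue.lastRenderedState, action);
    update.hasEagerState = true;
    update.eagerState = eagerState;
    if (Object.is(eagerState, queue.lastRenderedState)) {
      return "bailout"; // nothing changed, no render is scheduled
    }
  }
  fiber.lanes |= update.lane; // the fiber now has pending work
  model.scheduled += 1;
  return "scheduled";
}

const counter = createModel(0);
console.log(dispatch(counter, 0)); // "bailout": same value, fiber has no pending work
console.log(dispatch(counter, 1)); // "scheduled"
console.log(dispatch(counter, 0)); // "scheduled": eager check skipped, fiber has pending work

const obj = { n: 0 };
const objectModel = createModel(obj);
obj.n = 1; // mutating in place keeps the same reference
console.log(dispatch(objectModel, obj)); // "bailout"
```

The last case is the familiar pitfall: mutating state in place and passing the same reference back is treated as "no change".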

Summary of useState's dispatch#

Each call to the dispatch of useState generates a new update instance, which is appended to the update queue (hook.queue) of the corresponding hook. When the fiber has no other pending updates, the new value produced by the dispatch is first compared with the current value (currentState) using Object.is to decide whether the update needs to be scheduled at all. In v18, for a synchronous-priority update such as one dispatched from a click handler, the scheduled work is flushed in a microTask, so the dispatch of useState can be considered an asynchronous operation. Below is a simple example to verify this.

import { useState } from "react";

function UseState() {

const [text, setText] = useState(0);

return (

<div onClick={ () => { setText(1); console.log(text); } }>{ text }</div>

)

}

export default UseState;

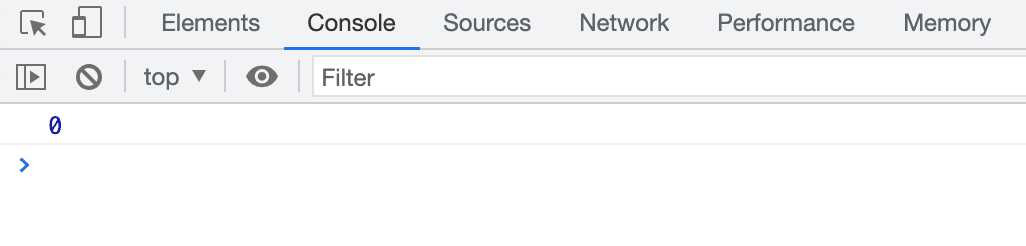

The result obtained after clicking:

Its call stack shows microtask.
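The same ordering can be reproduced outside React. Below is a toy model in which setState only queues the value and applies it in a microtask. All names here (setState, pendingUpdates, log) are hypothetical and have nothing to do with React's internals:

```javascript
let state = 0;
const pendingUpdates = [];
const log = [];
let flushScheduled = false;

// Toy setState: queue the value and flush once in a microtask.
function setState(next) {
  pendingUpdates.push(next);
  if (!flushScheduled) {
    flushScheduled = true;
    queueMicrotask(() => {
      flushScheduled = false;
      while (pendingUpdates.length > 0) {
        state = pendingUpdates.shift();
      }
      log.push(`render: ${state}`);
    });
  }
}

function onClick() {
  setState(1);
  log.push(`handler sees: ${state}`); // still the old value
}

// A macrotask queued before the click still runs after the microtask flush.
setTimeout(() => {
  log.push("next macrotask");
  console.log(log); // [ 'handler sees: 0', 'render: 1', 'next macrotask' ]
}, 0);

onClick();
```

The handler logs the old value because the flush only happens once the current synchronous code has finished, which matches what the console.log(text) in the example above prints.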

useState in the update scenario#

Next, we will slightly modify the original debugging code by adding a dispatch; the new debugging code looks like this.

import { useState } from "react";

function UseState() {

const [text, setText] = useState(0);

return (

<div onClick={ () => {

setText(1);

setText(2); // Set a breakpoint here

} }>{ text }</div>

)

}

export default UseState;

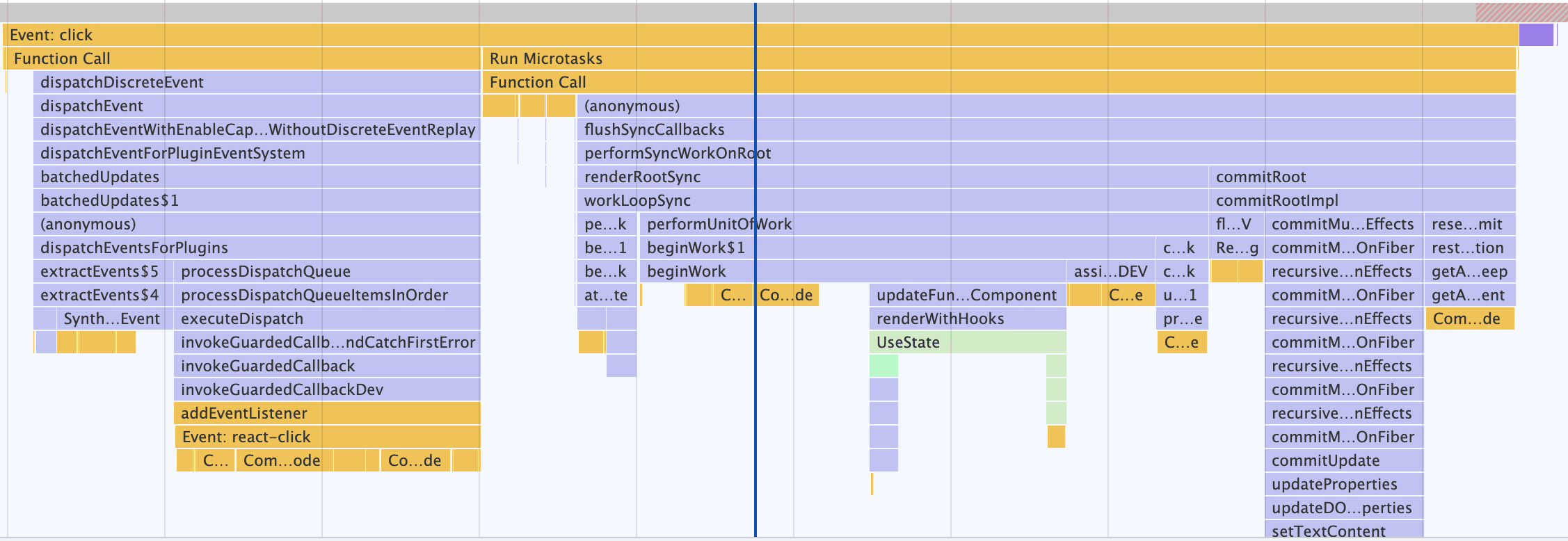

Recording the performance of the entire page looks like this:

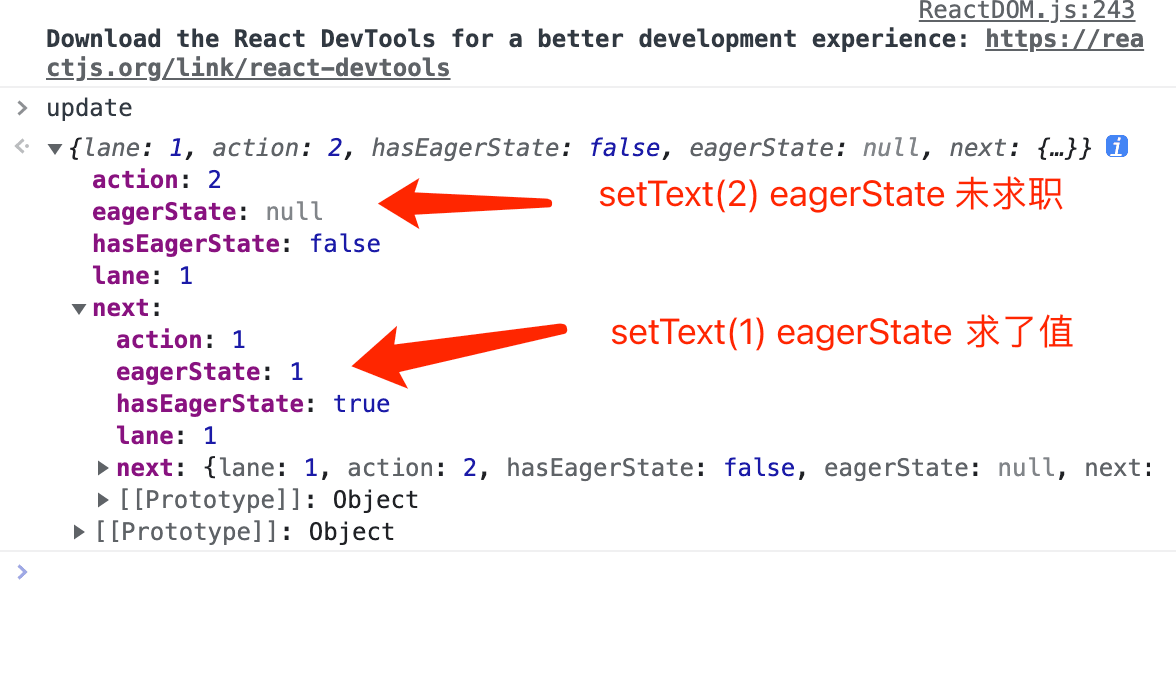

As an aside, during the second dispatch the condition fiber.lanes === NoLanes is no longer satisfied, so execution jumps straight to enqueueConcurrentHookUpdate (see the code of dispatchSetState for details).

After connecting the update linked list in enqueueConcurrentHookUpdate, we will obtain the following structure of a circular linked list:
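In code form, the circular structure can be sketched as follows. This is a simplified version of the enqueue step that ignores the interleaved queue, and the helper names enqueueUpdate and toArray are made up for illustration:

```javascript
// queue.pending always points at the LAST update; last.next is the FIRST one.
function enqueueUpdate(queue, update) {
  const pending = queue.pending;
  if (pending === null) {
    update.next = update; // a single update points to itself
  } else {
    update.next = pending.next; // new last -> first
    pending.next = update; // old last -> new last
  }
  queue.pending = update;
}

// Walk the ring starting from the first update, in dispatch order.
function toArray(queue) {
  const result = [];
  if (queue.pending === null) return result;
  const first = queue.pending.next;
  let node = first;
  do {
    result.push(node.action);
    node = node.next;
  } while (node !== first);
  return result;
}

const queue = { pending: null };
const update1 = { action: 1, next: null }; // setText(1)
const update2 = { action: 2, next: null }; // setText(2)
enqueueUpdate(queue, update1);
enqueueUpdate(queue, update2);

console.log(toArray(queue)); // [ 1, 2 ]
console.log(queue.pending === update2, update2.next === update1); // true true
```

Keeping a pointer to the last node gives constant-time access to both ends: the tail is queue.pending and the head is queue.pending.next.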

Returning to the main topic: during the update phase of useState, updateState calls the updateReducer function, which is the update-phase counterpart of mountReducer and is shared with useReducer.

function updateReducer<S, I, A>(

reducer: (S, A) => S,

initialArg: I,

init?: I => S,

): [S, Dispatch<A>] {

/**

* This function also appears in use(Layout)Effect; its main function is to

* simultaneously move the WIP hooks and current hooks pointer forward by one position

* For detailed comments, see [React Hooks: Appendix](https://github.com/IWSR/react-code-debug/issues/4)

*/

const hook = updateWorkInProgressHook(); // WIP hooks object

const queue = hook.queue; // update linked list

if (queue === null) {

throw new Error(

'Should have a queue. This is likely a bug in React. Please file an issue.',

);

}

// In the useState scenario, lastRenderedReducer is basicStateReducer — the reducer preset in mountState

queue.lastRenderedReducer = reducer;

const current: Hook = (currentHook: any);

// The last rebase update that is NOT part of the base state.

let baseQueue = current.baseQueue;

// The last pending update that hasn't been processed yet.

// Get the pending state update that hasn't been computed

const pendingQueue = queue.pending;

/**

* Organize the update queue to prepare for subsequent updates

*/

if (pendingQueue !== null) {

// We have new updates that haven't been processed yet.

// We'll add them to the base queue.

// Connect pendingQueue with baseQueue

if (baseQueue !== null) {

// Merge the pending queue and the base queue.

const baseFirst = baseQueue.next;

const pendingFirst = pendingQueue.next;

baseQueue.next = pendingFirst;

pendingQueue.next = baseFirst;

}

current.baseQueue = baseQueue = pendingQueue;

queue.pending = null;

}

/**

* The processed baseQueue contains all update instances

*/

if (baseQueue !== null) {

// We have a queue to process.

const first = baseQueue.next;

let newState = current.baseState;

let newBaseState = null;

let newBaseQueueFirst = null;

let newBaseQueueLast = null;

let update = first;

do {

const updateLane = update.lane;

/**

* Check whether this update's lane is included in renderLanes

* (isSubsetOfLanes is a bitwise check)

* If updateLane is a subset of renderLanes, the update is urgent enough

* to be processed in this render; otherwise, it is skipped.

* Similar logic also exists in the update linked list of class components (not detailed here)

* Note the ! below: this first branch handles the updates that are skipped

*/

if (!isSubsetOfLanes(renderLanes, updateLane)) {

// Priority is insufficient. Skip this update. If this is the first

// skipped update, the previous update/state is the new base

// update/state.

/**

* Entering this logic means that the update is not very urgent and can be processed slowly

* However, this does not mean it will not be processed; it will still be recalculated from the skipped updates when idle

* Therefore, the skipped update instances need to be cached

*/

const clone: Update<S, A> = {

lane: updateLane,

action: update.action,

hasEagerState: update.hasEagerState,

eagerState: update.eagerState,

next: (null: any),

};

/**

* Add the skipped update to newBaseQueue

* Recalculate during the next render

*/

if (newBaseQueueLast === null) {

newBaseQueueFirst = newBaseQueueLast = clone;

newBaseState = newState;

} else {

newBaseQueueLast = newBaseQueueLast.next = clone;

}

// Update the remaining priority in the queue.

// TODO: Don't need to accumulate this. Instead, we can remove

// renderLanes from the original lanes.

// Merge the skipped lane back into the lanes of the WIP fiber, so that the skipped update is recalculated the next time render is executed

// completeWork will collect these lanes to the root (merging into the root happens in commitRootImpl), and then reschedule (ensureRootIsScheduled in commitRootImpl)

currentlyRenderingFiber.lanes = mergeLanes(

currentlyRenderingFiber.lanes,

updateLane,

);

// Mark the skipped lanes to workInProgressRootSkippedLanes

markSkippedUpdateLanes(updateLane);

} else {

// This update does have sufficient priority.

// In the case of sufficient priority, the update is executed

if (newBaseQueueLast !== null) {

/**

* If newBaseQueueLast is not null, it indicates that there are skipped updates

* The state calculation of the update may be related

* Therefore, once an update is skipped, it becomes the starting point,

* and all subsequent updates until the end are taken regardless of priority.

* (Similar to the processing logic of class components)

*/

const clone: Update<S, A> = {

// This update is going to be committed so we never want uncommit

// it. Using NoLane works because 0 is a subset of all bitmasks, so

// this will never be skipped by the check above.

lane: NoLane,

action: update.action,

hasEagerState: update.hasEagerState,

eagerState: update.eagerState,

next: (null: any),

};

newBaseQueueLast = newBaseQueueLast.next = clone;

}

// Process this update.

// Execute this update and calculate the new state

if (update.hasEagerState) {

// The state that has been computed will be marked to avoid repeated computation

// If this update is a state update (not a reducer) and was processed eagerly,

// we can use the eagerly computed state

newState = ((update.eagerState: any): S);

} else {

// Calculate the new state based on state and action

const action = update.action;

newState = reducer(newState, action);

}

}

update = update.next;

} while (update !== null && update !== first);

if (newBaseQueueLast === null) {

newBaseState = newState;

} else {

newBaseQueueLast.next = (newBaseQueueFirst: any);

}

// Mark that the fiber performed work, but only if the new state is

// different from the current state.

// If the Object.is check fails (the state has changed), mark that the current WIP fiber received an update, so the component cannot bail out of this render

if (!is(newState, hook.memoizedState)) {

markWorkInProgressReceivedUpdate();

}

// Update the new data to the hook

hook.memoizedState = newState;

hook.baseState = newBaseState;

hook.baseQueue = newBaseQueueLast;

queue.lastRenderedState = newState;

}

// Interleaved updates are stored on a separate queue. We aren't going to

// process them during this render, but we do need to track which lanes

// are remaining.

/**

* Handle interleaved updates

* However, I decided to skip this analysis because I have no idea what kind of updates can be called interleaved updates

* There is a description of this in /react-reconciler/src/ReactFiberWorkLoop.old.js

* Received an update to a tree that's in the middle of rendering. Mark

that there was an interleaved update work on this root.

* However, this does not connect at all with the settings for queue.interleaved in enqueueConcurrentHookUpdate and

* enqueueConcurrentHookUpdateAndEagerlyBailout

* If anyone has knowledge in this area, please kindly guide me

*/

const lastInterleaved = queue.interleaved;

if (lastInterleaved !== null) {

let interleaved = lastInterleaved;

do {

const interleavedLane = interleaved.lane;

currentlyRenderingFiber.lanes = mergeLanes(

currentlyRenderingFiber.lanes,

interleavedLane,

);

markSkippedUpdateLanes(interleavedLane);

interleaved = ((interleaved: any).next: Update<S, A>);

} while (interleaved !== lastInterleaved);

} else if (baseQueue === null) {

// `queue.lanes` is used for entangling transitions. We can set it back to

// zero once the queue is empty.

queue.lanes = NoLanes;

}

const dispatch: Dispatch<A> = (queue.dispatch: any);

return [hook.memoizedState, dispatch];

}
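The lane checks used throughout updateReducer are plain bit operations. Here is a small sketch of the two helpers. The lane values are illustrative and are not React's real constants:

```javascript
const NoLane = 0b000;
const SyncLane = 0b001; // illustrative value
const TransitionLane = 0b100; // illustrative value

// True when every bit of `subset` is also set in `set`.
function isSubsetOfLanes(set, subset) {
  return (set & subset) === subset;
}

// Union of two lane sets.
function mergeLanes(a, b) {
  return a | b;
}

const renderLanes = SyncLane;

console.log(isSubsetOfLanes(renderLanes, SyncLane)); // true: processed in this render
console.log(isSubsetOfLanes(renderLanes, TransitionLane)); // false: skipped
console.log(isSubsetOfLanes(renderLanes, NoLane)); // true: NoLane is never skipped
console.log(mergeLanes(SyncLane, TransitionLane).toString(2)); // "101"
```

The third line is why cloned updates are given lane NoLane: zero is a subset of every bitmask, so they always pass the check.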

Summary#

In the update phase, useState computes the new state by walking the linked list of updates generated by the dispatch function; however, not every update is processed in a given render. Only updates whose priority (lane) is included in the current renderLanes are computed; skipped updates are kept in the base queue and processed during a later render.

Here is a simple example to prove this:

import { useEffect, useState, useRef, useCallback, useTransition } from "react";

function UseState() {

const dom = useRef(null);

const [number, setNumber] = useState(0);

const [, startTransition] = useTransition();

useEffect(() => {

const timeout1 = setTimeout(() => {

startTransition(() => { // Downgrade its priority to allow the update in timeout2 to interrupt

setNumber((preNumber) => preNumber + 1);

});

}, 500 )

const timeout2 = setTimeout(() => {

dom.current.click();

}, 505)

return () => {

clearTimeout(timeout1);

clearTimeout(timeout2);

}

}, []);

const clickHandle = useCallback(() => {

console.log('click');

setNumber(preNumber => preNumber + 2);

}, []);

return (

<div ref={dom} onClick={ clickHandle }>

{

Array.from(new Array(20000)).map((item, index) => <span key={index}>{ number }</span>)

}

</div>

)

}

export default UseState;

From the running results, since the update of timeout1 was downgraded by startTransition, the update of timeout2 was prioritized.

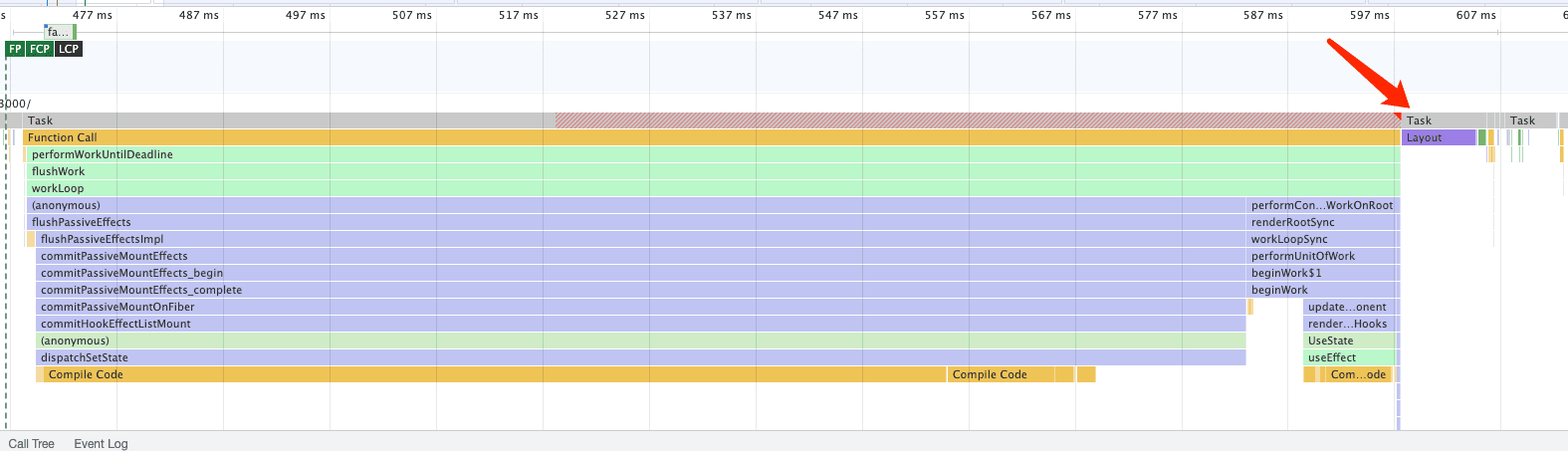
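The skip-and-rebase behavior of updateReducer can be modeled in a few lines. This sketch assumes two illustrative lanes and uses a plain array instead of the circular linked list. processUpdates is a hypothetical helper that only mirrors the loop analyzed above:

```javascript
const NoLane = 0b00;
const SyncLane = 0b01; // illustrative value
const TransitionLane = 0b10; // illustrative value

const isSubsetOfLanes = (set, subset) => (set & subset) === subset;
const reducer = (state, action) =>
  typeof action === "function" ? action(state) : action;

function processUpdates(baseState, updates, renderLanes) {
  let newState = baseState;
  let newBaseState = null;
  const newBaseQueue = [];
  for (const update of updates) {
    if (!isSubsetOfLanes(renderLanes, update.lane)) {
      // Skipped: remember the state just before the first skipped update.
      if (newBaseQueue.length === 0) newBaseState = newState;
      newBaseQueue.push({ ...update });
    } else {
      // Once something was skipped, keep every later update too (lane NoLane).
      if (newBaseQueue.length > 0) newBaseQueue.push({ ...update, lane: NoLane });
      newState = reducer(newState, update.action);
    }
  }
  if (newBaseQueue.length === 0) newBaseState = newState;
  return { memoizedState: newState, baseState: newBaseState, baseQueue: newBaseQueue };
}

const updates = [
  { lane: TransitionLane, action: (n) => n + 1 }, // from startTransition
  { lane: SyncLane, action: (n) => n + 2 }, // from the click
];

// First render: only the click's lane is rendered.
const first = processUpdates(0, updates, SyncLane);
console.log(first.memoizedState, first.baseState, first.baseQueue.length); // 2 0 2

// Later render: the transition lane is rendered, starting again from baseState.
const second = processUpdates(first.baseState, first.baseQueue, TransitionLane);
console.log(second.memoizedState, second.baseQueue.length); // 3 0
```

In this model the intermediate result is 2 (only the click applied) and the final result is 3, computed as 0 + 1 + 2 in the original dispatch order rather than 2 + 1 on top of the intermediate state.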

Batching#

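Batching already existed in v17, but only inside React event handlers; v18 removed that limitation, so updates dispatched from timeouts, promises, and native event handlers are batched as well, which is called automatic batching (annoying). For more details, you can check this article.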

For example, if we run the following code (of course, the debugging environment is still v18):

import { useEffect, useState } from "react";

function UseState() {

const [num1, setNum1] = useState(1);

const [num2, setNum2] = useState(2);

useEffect(() => {

setNum1(11);

setNum2(22);

}, []);

return (

<>

<div>{ num1 }</div>

<div>{ num2 }</div>

</>

)

}

According to the analysis of dispatchSetState mentioned above, as long as there are updates that need to be rendered on the page, the update will call scheduleUpdateOnFiber to trigger rendering. In this example, two dispatch calls were made consecutively, which means two calls to scheduleUpdateOnFiber were triggered. So the question is—how many times did the page render?

Just once.

As for why, we need to look at what scheduleUpdateOnFiber does; it involves the entire update entry, so it is also a relatively important function.

This function mainly handles:

- Check for infinite updates — checkForNestedUpdates

- Mark the lanes of updates that need to be updated on root.pendingLanes — markRootUpdated

- Trigger ensureRootIsScheduled, entering the core function of task scheduling
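The second step of the list above is a single bitwise OR on the root. Here is a stripped-down sketch. The root object and the lane values are assumptions for illustration, and the real markRootUpdated does more bookkeeping than this:

```javascript
const SyncLane = 0b01; // illustrative bit values, not React's real ones
const TransitionLane = 0b10;

// Hypothetical, stripped-down FiberRoot: only the field this step touches.
const root = { pendingLanes: 0 };

// Simplified markRootUpdated: record that `updateLane` has pending work.
function markRootUpdated(root, updateLane) {
  root.pendingLanes |= updateLane;
}

markRootUpdated(root, TransitionLane);
markRootUpdated(root, SyncLane);
markRootUpdated(root, SyncLane); // marking the same lane twice changes nothing

console.log(root.pendingLanes.toString(2)); // "11"
```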

scheduleUpdateOnFiber#

export function scheduleUpdateOnFiber(

root: FiberRoot,

fiber: Fiber,

lane: Lane,

eventTime: number,

) {

// Count the number of times the root synchronously re-renders without

// finishing. If there are too many, it indicates an infinite update loop.

checkForNestedUpdates();

// Mark that the root has a pending update.

markRootUpdated(root, lane, eventTime);

if (

(executionContext & RenderContext) !== NoLanes &&

root === workInProgressRoot

) {

/**

* Error handling, skip

*/

} else {

...

/**

* Important function! Triggering the core of task scheduling

* The logic we are looking for is also inside

*/

ensureRootIsScheduled(root, eventTime);

...

// The following is compatibility code for Legacy mode, not looking at it

}

}

At this point, we encounter another important function within React—ensureRootIsScheduled. Entering this function allows us to see the core logic of task scheduling within React (there is also some core logic in the Scheduler; you can check my article React Scheduler: Scheduler Source Code Analysis).

ensureRootIsScheduled#

function ensureRootIsScheduled(root: FiberRoot, currentTime: number) {

/**

* root.callbackNode is the task generated by the Scheduler during scheduling,

* and this value will be returned by React when calling scheduleCallback and assigned to root.callbackNode

* From the variable name existingCallbackNode, it is easy to infer that this variable is used to indicate an already scheduled task (old task)

*/

const existingCallbackNode = root.callbackNode;

// Check if any lanes are being starved by other work. If so, mark them as

// expired so we know to work on those next.

/**

* This is to check if there are any tasks waiting that have expired (for example, some high-priority tasks keep interrupting,

* causing low-priority tasks to be unable to execute and expire). If there are expired tasks, mark their lanes to

* expiredLanes, so React can immediately call them with synchronous priority

*/

markStarvedLanesAsExpired(root, currentTime);

// Determine the next lanes to work on, and their priority.

// Get renderLanes

const nextLanes = getNextLanes(

root,

root === workInProgressRoot ? workInProgressRootRenderLanes : NoLanes,

);

/**

* If renderLanes is empty, it means there is no need to start scheduling, exit

*/

if (nextLanes === NoLanes) {

// Special case: There's nothing to work on.

if (existingCallbackNode !== null) {

cancelCallback(existingCallbackNode);

}

root.callbackNode = null;

root.callbackPriority = NoLane;

return;

}

// We use the highest priority lane to represent the priority of the callback.

/**

* Generate the priority of the task corresponding to the highest priority in renderLanes

*/

const newCallbackPriority = getHighestPriorityLane(nextLanes);

// Check if there's an existing task. We may be able to reuse it.

/**

* Get the priority of the old task

*/

const existingCallbackPriority = root.callbackPriority;

/**

* If the priorities of the two tasks are the same, exit directly, as there is already a task that can be reused

* This is also where batching is implemented

*/

if (

existingCallbackPriority === newCallbackPriority

) {

// The priority hasn't changed. We can reuse the existing task. Exit.

return;

}

/**

* Interruption logic

* Reaching this point means the new update has a different (typically higher) priority than the existing task, so it needs to be rescheduled

* The old task no longer needs to run, so it is canceled (the cancellation logic can be seen in the Scheduler)

*/

if (existingCallbackNode != null) {

// Cancel the existing callback. We'll schedule a new one below.

cancelCallback(existingCallbackNode);

}

// Schedule a new task

let newCallbackNode;

if (newCallbackPriority === SyncLane) {

// Special case: Sync React callbacks are scheduled on a special

// internal queue

/**

* Synchronous priority means this may be an expired task, an update from a discrete event such as a click, or a root in non-concurrent (legacy) mode

*/

if (root.tag === LegacyRoot) {

scheduleLegacySyncCallback(performSyncWorkOnRoot.bind(null, root));

} else {

scheduleSyncCallback(performSyncWorkOnRoot.bind(null, root));

}

if (supportsMicrotasks) {

// Flush the queue in a microtask.

/**

* If the host environment supports microtasks, flush performSyncWorkOnRoot in a microtask

* so that it runs sooner

* One turn of the event loop runs in the order: one MacroTask → drain all microtasks → browser renders the page

* Otherwise the work goes through the Scheduler, whose MessageChannel tasks are all MacroTasks, so it would only run in a later turn of the

* event loop

*/

scheduleMicrotask(() => {

if (

(executionContext & (RenderContext | CommitContext)) ===

NoContext

) {

/**

* flushSyncCallbacks runs the callbacks queued above by scheduleSyncCallback, which means performSyncWorkOnRoot is called here

*/

flushSyncCallbacks();

}

});

} else {

// Flush the queue in an Immediate task.

scheduleCallback(ImmediateSchedulerPriority, flushSyncCallbacks);

}

newCallbackNode = null;

} else {

// Handling logic for concurrent mode

let schedulerPriorityLevel;

// Convert renderLanes to scheduling priority

switch (lanesToEventPriority(nextLanes)) {

case DiscreteEventPriority:

schedulerPriorityLevel = ImmediateSchedulerPriority;

break;

case ContinuousEventPriority:

schedulerPriorityLevel = UserBlockingSchedulerPriority;

break;

case DefaultEventPriority:

schedulerPriorityLevel = NormalSchedulerPriority;

break;

case IdleEventPriority:

schedulerPriorityLevel = IdleSchedulerPriority;

break;

default:

schedulerPriorityLevel = NormalSchedulerPriority;

break;

}

/**

* Use the corresponding priority to schedule React's task

*/

newCallbackNode = scheduleCallback(

schedulerPriorityLevel,

performConcurrentWorkOnRoot.bind(null, root),

);

}

// Store the task priority and the task on the root so that the next call to ensureRootIsScheduled can read them back

// as the existing (old) task used at the beginning of this function

root.callbackPriority = newCallbackPriority;

root.callbackNode = newCallbackNode;

}
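Picking the highest-priority lane relies on a classic bit trick: lanes & -lanes isolates the lowest set bit, and lower bits mean higher priority. A small sketch with a made-up lane set:

```javascript
// Isolate the lowest set bit; with lanes, the lowest bit is the highest priority.
function getHighestPriorityLane(lanes) {
  return lanes & -lanes;
}

const pendingLanes = 0b10100; // two pending lanes, illustrative values

console.log(getHighestPriorityLane(pendingLanes).toString(2)); // "100"
console.log(getHighestPriorityLane(0)); // 0, nothing pending
```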

After analyzing ensureRootIsScheduled, we have a general understanding of how batching is implemented; its implementation is actually the reuse of tasks with the same priority within React, allowing multiple dispatch calls to hooks but rendering only once.
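Both the task reuse and the interruption branch can be reproduced with a toy model. The scheduledTasks array stands in for the Scheduler, and the lane values and return strings are assumptions. Unlike React, this sketch also stores a task node for synchronous work:

```javascript
const NoLane = 0;
const SyncLane = 0b01; // illustrative value
const DefaultLane = 0b10; // illustrative value

const root = { pendingLanes: 0, callbackPriority: NoLane, callbackNode: null };
const scheduledTasks = []; // hypothetical stand-in for the Scheduler's queue

function ensureRootIsScheduled(root) {
  const newPriority = root.pendingLanes & -root.pendingLanes;
  if (newPriority === NoLane) return "idle";
  // Same priority as the existing task: reuse it. This is where batching happens.
  if (root.callbackPriority === newPriority) return "reused";
  // Different priority: cancel the old task and schedule a new one.
  if (root.callbackNode !== null) root.callbackNode.cancelled = true;
  const task = { priority: newPriority, cancelled: false };
  scheduledTasks.push(task);
  root.callbackPriority = newPriority;
  root.callbackNode = task;
  return "scheduled";
}

function scheduleUpdate(root, lane) {
  root.pendingLanes |= lane;
  return ensureRootIsScheduled(root);
}

const r1 = scheduleUpdate(root, DefaultLane); // like setNum1(11)
const r2 = scheduleUpdate(root, DefaultLane); // like setNum2(22)
const r3 = scheduleUpdate(root, SyncLane); // a more urgent update arrives

console.log(r1, r2, r3); // scheduled reused scheduled
console.log(scheduledTasks.map((t) => t.cancelled)); // [ true, false ]
```

Two dispatches at the same priority produce a single task, and therefore a single render, while the urgent update cancels that task and replaces it.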

Combined with React Scheduler: Scheduler Source Code Analysis, we have now covered all three major modules within React (scheduling → reconciliation → rendering), so let me pose a question to tie the entire article together.

What does React do from triggering the dispatch of useState to rendering on the page—scheduling edition?

- Generate an update instance with the corresponding priority (lane) and append it, as a circular linked list, to the queue property of the hook object corresponding to useState.

- If the update is determined to need rendering on the page, mark its lane on the childLanes of every ancestor of the fiber where the update originated, layer by layer, up to the root.

- Then scheduleUpdateOnFiber is called to enter the scheduling phase:

  - First, mark the lane of the current update on root.pendingLanes; pendingLanes can be viewed as all the work still waiting to be rendered.

  - Then take the highest-priority lanes from pendingLanes as the current renderLanes.

  - Before registering a task with the Scheduler, check whether a task is already registered but not yet executed (root.callbackNode); if so, compare the priority of that task with renderLanes.

    - If the priorities are the same, reuse that task.

    - If renderLanes has a higher priority, cancel the old task (root.callbackNode), register a new task with the Scheduler, and assign the returned task to root.callbackNode.
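The "layer by layer up to the root" marking from the second step can be sketched as a walk along the return pointers. The fiber shape and the createFiber helper are simplified assumptions; the real function also updates alternates and returns the FiberRoot rather than the top-most fiber:

```javascript
const SyncLane = 0b1; // illustrative value

// Hypothetical minimal fiber: only the fields needed for this walk.
function createFiber(name, parent = null) {
  return { name, return: parent, lanes: 0, childLanes: 0 };
}

function markUpdateLaneFromFiberToRoot(sourceFiber, lane) {
  sourceFiber.lanes |= lane; // the fiber itself has work
  let node = sourceFiber;
  let parent = sourceFiber.return;
  while (parent !== null) {
    parent.childLanes |= lane; // some descendant has work on this lane
    node = parent;
    parent = parent.return;
  }
  return node; // the top-most fiber reached
}

const rootFiber = createFiber("HostRoot");
const app = createFiber("App", rootFiber);
const useStateFiber = createFiber("UseState", app);

const top = markUpdateLaneFromFiberToRoot(useStateFiber, SyncLane);

console.log(top.name); // "HostRoot"
console.log(useStateFiber.lanes, app.childLanes, rootFiber.childLanes); // 1 1 1
```

With childLanes marked along the path, the render phase can skip any subtree whose childLanes does not intersect renderLanes.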